DeepMind, known for its work in artificial intelligence and for building neural networks that play computer and board games at a human level, has introduced AlphaCode, a machine learning system for code generation that can take part in programming competitions on the Codeforces platform and achieve an average result. The key feature of the system is its ability to generate code in Python or C++ from a problem statement written in English.

To test the system, 10 recent Codeforces competitions with more than 5,000 participants were selected, all held after training of the machine learning model had finished. On these tasks, AlphaCode placed roughly in the middle of the competition rankings (54.3%). Its estimated overall rating was 1238 points, which puts it in the top 28% of all participants who competed at least once in the past 6 months. The project is noted to be at an early stage of development. Future plans include improving the quality of the generated code and developing AlphaCode toward systems that assist in writing code, or toward application development tools that people without programming skills can use.

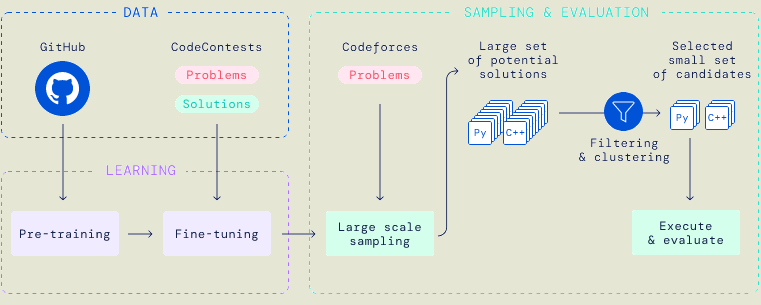

The project uses the Transformer neural network architecture in combination with sampling and filtering methods, which make it possible to generate many varied, unpredictable code candidates that match the natural-language text. After filtering, clustering, and ranking, the best working code is selected from the generated stream of candidates and checked for whether it produces the correct result (each competition task specifies example input data and the result the program must produce for it).
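The filter, cluster, and rank steps can be illustrated with a minimal sketch. This is not DeepMind's implementation: candidate programs are modelled as plain Python functions from input text to output text, the names `passes_examples`, `select_candidates`, and `probe_inputs` are hypothetical, and grouping candidates by their outputs on extra probe inputs is an assumption about what "clustering" could mean here.

```python
from collections import defaultdict
from typing import Callable, List, Tuple

# Hypothetical stand-in: a candidate program maps input text to output text.
Program = Callable[[str], str]


def _safe_run(program: Program, text: str) -> str:
    """Run a candidate, turning any crash into a marker string."""
    try:
        return program(text).strip()
    except Exception:
        return "<error>"


def passes_examples(program: Program, examples: List[Tuple[str, str]]) -> bool:
    """True if the candidate reproduces every example from the task statement."""
    return all(_safe_run(program, given) == expected.strip()
               for given, expected in examples)


def select_candidates(candidates: List[Program],
                      examples: List[Tuple[str, str]],
                      probe_inputs: List[str],
                      top_k: int = 1) -> List[Program]:
    # 1. Filtering: drop candidates that fail the published examples.
    survivors = [p for p in candidates if passes_examples(p, examples)]

    # 2. Clustering (assumed scheme): group survivors that behave
    #    identically on additional probe inputs.
    clusters = defaultdict(list)
    for program in survivors:
        signature = tuple(_safe_run(program, x) for x in probe_inputs)
        clusters[signature].append(program)

    # 3. Ranking: larger clusters first; take one representative from each.
    ranked = sorted(clusters.values(), key=len, reverse=True)
    return [cluster[0] for cluster in ranked[:top_k]]


# Toy task: "print the sum of two integers". The only example is "2 2" -> "4",
# so a multiplying candidate also survives the filter.
def add_a(s: str) -> str:
    return str(sum(map(int, s.split())))


def add_b(s: str) -> str:
    a, b = s.split()
    return str(int(a) + int(b))


def mul(s: str) -> str:
    a, b = s.split()
    return str(int(a) * int(b))


def wrong(s: str) -> str:
    return "0"


examples = [("2 2", "4")]
probe_inputs = ["1 3", "5 7"]
best = select_candidates([wrong, mul, add_a, add_b], examples, probe_inputs)
```

In this toy run, `wrong` is removed by the filter, while `mul` passes the single example but ends up alone in its cluster, so the representative of the larger "addition" cluster is chosen. The design point is that agreement between independently generated candidates serves as a cheap signal of correctness when only a few example tests are available.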

For initial pretraining of the machine learning system, a code base available in public GitHub repositories was used. After the initial model was prepared, an optimization phase followed, based on code collections