Karol Herbst of Red Hat, who works on Mesa, the Nouveau driver and the open-source OpenCL stack, has proposed the cluda driver for inclusion in Mesa. cluda implements the [Gallium](https://docs.mesa3d.org/gallium/index.html) driver interface on top of the CUDA API provided by the proprietary NVIDIA driver. Mesa uses Gallium to simplify driver development: it defines common software interfaces that are not tied to individual hardware devices, and each driver implements them for its hardware. cluda implements only the compute-related part of these interfaces, which is enough to implement the OpenCL specification on top of CUDA through Mesa's rusticl OpenCL implementation.

The cluda driver is expected to address shortcomings of OpenCL support on systems running the proprietary NVIDIA driver. The extra layer makes it possible to provide OpenCL extensions that NVIDIA's own OpenCL stack lacks, while still running on the proprietary driver. cluda relies only on the libcuda.so library (the CUDA driver API), which ships with the NVIDIA GPU driver, and does not depend on the CUDA runtime. In its current form, the cluda-based OpenCL implementation supports memory operations and can run compute kernels.

The cluda- and rusticl-based OpenCL implementation supports the following OpenCL extensions that are not present in the NVIDIA implementation:

- cl_khr_extended_bit_ops

- cl_khr_integer_dot_product

- cl_khr_fp16

- cl_khr_suggested_local_work_size

- cl_khr_subgroup_extended_types

- cl_khr_subgroup_ballot

- cl_khr_subgroup_clustered_reduce

- cl_khr_subgroup_non_uniform_arithmetic

- cl_khr_subgroup_non_uniform_vote

- cl_khr_subgroup_rotate

- cl_khr_subgroup_shuffle

- cl_khr_subgroup_shuffle_relative

- cl_khr_il_program (Yes, that means SPIR-V support)

- cl_khr_spirv_linkonce_odr

- cl_khr_spirv_no_integer_wrap_decoration

- cl_khr_spirv_queries

- cl_khr_expect_assume

- cl_ext_immutable_memory_objects

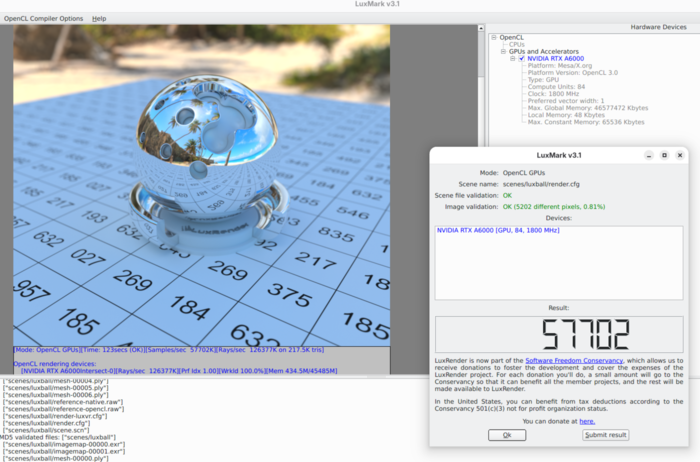

In the LuxMark 3.1 benchmark, the OpenCL implementation based on Mesa, cluda and rusticl scored 57702 points, compared with 64009 points for NVIDIA's own stack. The performance gap is explained by the overhead of translating Mesa's NIR intermediate representation into CUDA PTX (Parallel Thread Execution). Possible optimizations that could narrow the gap include broader use of vectorization and the use of JIT compilation.
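Expressed as a ratio, these LuxMark 3.1 scores put the Mesa, cluda and rusticl stack at roughly 90% of the NVIDIA stack's score, i.e. about 10% lower:

$$
\frac{57702}{64009} \approx 0.901, \qquad 1 - \frac{57702}{64009} = \frac{6307}{64009} \approx 0.099
$$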