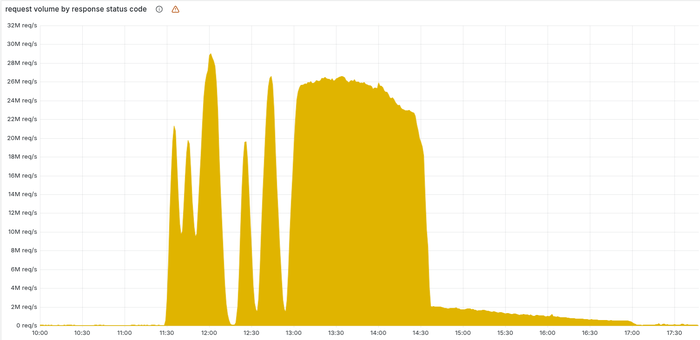

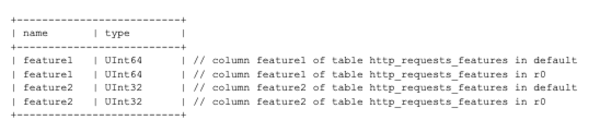

Cloudflare has published an analysis of one of the largest incidents in its infrastructure, which left a large part of its content delivery network inoperative for more than 3 hours yesterday. The failure occurred after a change to the structure of a database stored in ClickHouse, which caused the file with parameters for the anti-bot system to double in size. The change produced duplicate tables in the database, and the SQL query that generates the file simply returned all data from all tables by key, without eliminating duplicates.

SELECT name, type FROM system.columns WHERE table = 'http_requests_features' ORDER BY name;
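As an illustration of what eliminating duplicates could look like on the consuming side, the following minimal sketch collapses repeated `(name, type)` rows before a file is generated. The function name `dedup_columns` and the sample rows are hypothetical; this is not Cloudflare's code, and the article does not say how the problem was actually fixed.

```rust
use std::collections::BTreeSet;

/// Collapses repeated (name, type) rows into a unique list.
/// `dedup_columns` is a hypothetical helper used only for illustration.
/// A BTreeSet keeps the result ordered by name (then by type),
/// which mirrors the ORDER BY clause of the query.
fn dedup_columns(rows: &[(&str, &str)]) -> Vec<(String, String)> {
    let unique: BTreeSet<(String, String)> = rows
        .iter()
        .map(|(name, ty)| (name.to_string(), ty.to_string()))
        .collect();
    unique.into_iter().collect()
}
```

With this approach, a result set in which every row appears twice yields the same list as the original, non-duplicated result set.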

The generated file was distributed to all cluster nodes that process incoming requests. The handler that uses this file to detect requests from bots keeps the parameters from the file in RAM, and to protect against excessive memory consumption the code imposes a limit on the maximum allowed file size. Under normal conditions the actual file size was well below the limit, but once the tables were duplicated it exceeded the limit.

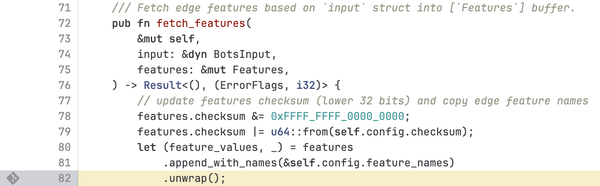

Instead of handling the exceeded limit gracefully, by continuing to use the previous version of the file and notifying the monitoring system of the emergency, the handler terminated abnormally, which blocked further traffic forwarding. The error was caused by calling the unwrap() method on a Result value in Rust code.
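The graceful behavior described above (keep the previous file, report the problem) might be sketched as follows. This is only an illustration under stated assumptions: the names `BotConfig`, `LoadError`, `parse_config` and `reload`, the one-feature-per-line format, and the 1024-byte limit are all hypothetical and are not taken from Cloudflare's code.

```rust
use std::fmt;

/// Hypothetical size limit in bytes; the real value is not given in the text.
const MAX_FILE_BYTES: usize = 1024;

/// Hypothetical in-memory form of the anti-bot parameter file.
#[derive(Debug, Clone, PartialEq)]
struct BotConfig {
    features: Vec<String>,
}

#[derive(Debug, PartialEq)]
enum LoadError {
    TooLarge { size: usize, limit: usize },
}

impl fmt::Display for LoadError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            LoadError::TooLarge { size, limit } => {
                write!(f, "file is {} bytes, limit is {}", size, limit)
            }
        }
    }
}

/// Rejects oversized input with an error instead of panicking.
/// Assumes one feature per line, purely for illustration.
fn parse_config(contents: &str) -> Result<BotConfig, LoadError> {
    if contents.len() > MAX_FILE_BYTES {
        return Err(LoadError::TooLarge {
            size: contents.len(),
            limit: MAX_FILE_BYTES,
        });
    }
    let features = contents.lines().map(str::to_owned).collect();
    Ok(BotConfig { features })
}

/// Returns the configuration to keep using, plus an optional alert
/// message that the caller would forward to monitoring.
fn reload(current: BotConfig, contents: &str) -> (BotConfig, Option<String>) {
    match parse_config(contents) {
        Ok(new_config) => (new_config, None),
        // On failure, keep serving with the previous configuration.
        Err(err) => (current, Some(format!("config rejected: {}", err))),
    }
}
```

The design choice here is that an invalid file is an expected failure mode: it is reported as a value the caller must handle, so a bad file cannot stop request processing by itself.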

When a Result value is in the “Ok” state, unwrap() returns the value associated with that state; if the result is an error, the call panics (the “panic!” macro is invoked). unwrap() is typically used during debugging or in test code and is not recommended in production projects.
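The difference between unwrap() and explicit error handling can be shown with a small sketch. The functions `parse_limit`, `limit_or_panic` and `limit_or_default` are hypothetical and exist only for illustration; `Result::unwrap`, `str::parse` and `match` are standard Rust.

```rust
use std::num::ParseIntError;

/// Hypothetical parser used only for illustration.
fn parse_limit(text: &str) -> Result<u32, ParseIntError> {
    text.trim().parse::<u32>()
}

/// Returns the value on Ok, panics on Err.
/// Acceptable in tests, risky on a request-processing path.
fn limit_or_panic(text: &str) -> u32 {
    parse_limit(text).unwrap()
}

/// Handles the error explicitly and falls back to a default value.
fn limit_or_default(text: &str, default: u32) -> u32 {
    match parse_limit(text) {
        Ok(value) => value,
        Err(err) => {
            // Report the problem instead of terminating.
            eprintln!("invalid limit {:?}: {}", text, err);
            default
        }
    }
}
```

Given valid input such as "200", both functions return the parsed number. Given malformed input, `limit_or_panic` panics, while `limit_or_default` logs the error and returns the supplied default.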